If ordinary bank ATMs can be made secure and reliable, why can't electronic voting machines? It's a simple enough question, but, sadly, the answer isn't so simple. Secure voting is a much more complex technical problem than electronic banking, not least because a democratic election's dual requirements for ballot secrecy and transparent auditability are often in tension with one another in the computerized environment. Making ATMs robust and resistant to thieves is easy by comparison.

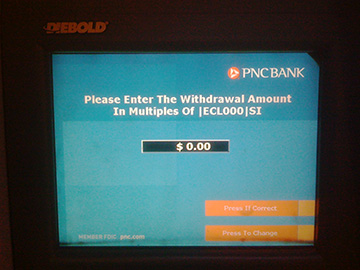

But even ATMs aren't immune from obscure and mysterious failures. I was reminded of this earlier today when I tried to make a withdrawal at a PNC Bank cash machine in Philadelphia. When I reached the screen for selecting the amount of cash I wanted, I was prompted to "Please Enter The Withdrawal Amount In Multiples of |ECL000|SI". Normally, the increment is $10 or $20, and |ECL000|SI isn't a currency denomination with which I'm at all familiar. See the photo above.

Obviously, something was wrong with the machine -- its hardware, its software or its configuration -- and on realizing this I faced a dilemma. What else was wrong with it? Do I forge ahead and ask for my cash, trusting that my account won't be completely emptied in the process? Or do I attempt to cancel the transaction and hope that I get my card back so I could try my luck elsewhere? Complicating matters was the looming 3-day weekend, not to mention the fact that I was about to leave for a trip out of the country. If my card got eaten, I'd end up without any simple way to get cash when I got to my destination. Wisely or not, I decided to hold my breath and continue on, hoping that this was merely an isolated glitch in the user interface, limited to that one field.

Or not. I let out an audible sigh of relief when the machine dispensed my cash and returned my card. But it also gave me (and debited my account) $10 more than I requested. And although I selected "yes" when asked if I wanted a receipt, it didn't print one. So there were at least three things wrong with this ATM (the adjacent machine seemed to be working normally, so it wasn't a systemwide problem). Since there was an open bank branch next door, I decided to report the problem.

The assistant branch manager confidently informed me that the ATMs had been working fine, that there was no physical damage to the machine, and so I must have made a mistake. No, there was no need to investigate further; no one has complained before, and if I hadn't lost any money, what was I worried about? When I tried to show her the screen shot on my phone, she ended the conversation by pointing out that for security reasons, photography is not permitted in the bank (even though the ATM in question wasn't in the branch itself). It was like talking to a polite brick wall.

Such exchanges are maddeningly familiar in the security world, even when the stakes are far higher than they were here. Once invested in a complex technology, there's a natural tendency to defend it even when confronted with persuasive evidence that it isn't working properly. Banking systems can and do fail, but because the failures are relatively rare, we pretend that they never happen at all; see the excellent new edition of Ross Anderson's Security Engineering text for a litany of dismaying examples.

But knowing that doesn't make it any less frustrating when flaws are discovered and then ignored, whether in an ATM or a voting machine. Perhaps the bank manager could join me for a little game of Security Excuse Bingo [link].

N.B.: Yes, the terminal in question was made by Diebold, and yes, their subsidiary, Premier Election Systems, has faced criticism for problems and vulnerabilities in its voting products. But that's not an entirely fair brush with which to paint this problem, since without knowing the details, it could just as easily have been caused entirely by the bank's software or configuration.

Readers of this blog may recall that in the Fall of 2005, my graduate students (Micah Sherr, Eric Cronin, and Sandy Clark) and I discovered that the telephone wiretap technology commonly used by law enforcement agencies can be misled or disabled altogether simply by sending various low-level audio signals on the target's line [link our full pdf paper here]. Fortunately, certain newer tapping systems, based on the 1994 CALEA regulations, have the potential to neutralize these vulnerabilities, depending on how they are configured. Shortly after we informed the FBI about our findings, an FBI spokesperson reassured the New York Times that the problem was now largely fixed and affects less than 10 percent of taps [link].

Newly-released data, however, suggest that the FBI's assessment may have been wildly optimistic. According to a March, 2008 Department of Justice audit on CALEA implementation [pdf], about 40 percent of telephone switches remained incompatible with CALEA at the end of 2005. But it may be even worse than that; it's possible that many of the other 60 percent are vulnerable, too. According to the DoJ report, the FBI is paying several telephone companies to retrofit their switches with a "dial-out" version of CALEA. But as we discovered when we did our wiretapping research, CALEA dial-out has backward compatibility features that can make it just as vulnerable as the previous systems. These features can sometimes be turned off, but it can be difficult to reliably do so. And there's nothing in the extensive testing section of the audit report to suggest that CALEA collection systems are even tested for this.

By itself, this could serve as an object lesson on the security risks of backward compatibility, a reminder that even relatively simple things like wiretapping systems are difficult to get right without extensive review. The small technical details matter a lot here, which is why we should always scrutinize -- carefully and publicly -- new surveillance proposals to ensure that they work as intended and don't create subtle risks of their own. (That point is a recurring theme here; see this post or this post, for example.)

But that's not the most notable thing about the DoJ audit report.

It turns out that there's sensitive text hidden in the PDF version of the report, which is prominently marked "REDACTED - FOR PUBLIC RELEASE" on each page. It seems that whoever tried to sanitize the public version of the document did so by pasting an opaque PDF layer atop the sensitive data in several of the figures (e.g., on page 9). This is widely known to be a completely ineffective redaction technique, since the extra layer can be removed easily with Adobe's own Acrobat software or by just cutting and pasting text. In this case, I discovered the hidden text by accident, while copying part of the document into an email message to one of my students. (Select the blanked-out subtitle line in this blog entry to see how easy it is.) Ironically, the Justice Department has suffered embarrassment for this exact mistake at least once before: two years ago, they filed a leaky pdf court document that exposed eight pages of confidential material [see link].

This time around, the leaked "sensitive" information seems entirely innocuous, and I'm hard pressed to understand the justification for withholding it in the first place (which is why I'm comfortable discussing it here). Some of the censored data concerns the FBI's financial arrangements with Verizon for CALEA retrofits of their wireline network (they paid $2,550 each to upgrade 1,140 older phone switches; now you know).

A bit more interesting was a redacted survey of federal and state law enforcement wiretapping problems. In 2006, more than a third of the agencies surveyed were tapping (or trying to tap) VoIP and broadband services. Also redacted was the fact that law enforcement sees VoIP, broadband, and pre-paid cellular telephones as the three main threats to wiretapping (although the complexities of tapping disposable "burner" cellphones are hardly a secret to fans of TV police procedurals such as The Wire). Significantly, there's no mention of any problems with cryptography, the FBI's dire predictions to the contrary during the 1990s notwithstanding.

But don't take my word for it. A partially de-redacted version of the DoJ audit report can be found here [pdf]. (Or you can do it yourself from the original, archived here [pdf].)

The NSA has a helpful guide to effective document sanitization [pdf]; perhaps someone should send a copy over to the Justice Department. Until then, remind me not to become a confidential informant, lest my name show up in some badly redacted court filing.

Addendum, 16 May 2008, 9pm: Ryan Singel has a nice summary on Wired's Threat Level blog, although for some reason he accused me (now fixed) of being a professor at Princeton. (I'm actually at the University of Pennsylvania, although I suppose I could move to Princeton if I ever have to enter witness protection due to a redaction error).

Addendum, 16 May 2008, 11pm: The entire Office of the Inspector General's section of the DoJ's web site (where the report had been hosted) seems to have vanished this evening, with all of the pages returning 404 errors, presumably while someone checks for other improperly sanitized documents.

Addendum, 18 May 2008, 2am: The OIG web site is now back on the air, with a new PDF of the audit report. The removable opaque layers are still there, but the entries in the redacted tables have been replaced by the letter "x". So this barn door seems now to be closed.

Photo above: A law enforcement "loop extender" phone tap, which is vulnerable to simple countermeasures by the surveillance target. This one was made by Recall Technologies, photo by me.

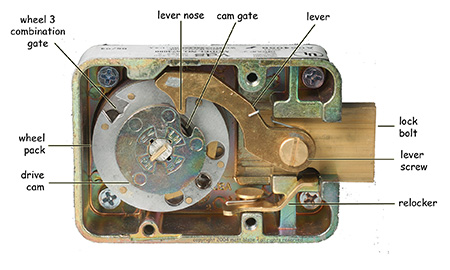

When I published Safecracking for the Computer Scientist [pdf] a few years ago, I worried that I might be alone in harboring a serious interest in the cryptologic aspects of physical security. Yesterday I was delighted to discover that I had been wrong. It turns out that more than ten years before I wrote up my safecracking survey, a detailed analysis of the keyspaces of mechanical safe locks had already been written, suggesting a simple and practical dictionary attack of which I was completely unaware. But I have an excuse for my ignorance: the study was published in secret, in Cryptologic Quarterly, a classified technical journal of the US National Security Agency.

This month the NSA quietly declassified a number of internal technical and historical documents from the 1980's and 1990's (this latest batch includes more recent material than the previously-released 1970's papers I mentioned in last weekend's post here). Among the newly-released papers was Telephone Codes and Safe Combinations: A Deadly Duo [pdf] from Spring 1993, with the author names and more than half of the content still classified and redacted.

Reading between the redacted sections, it's not too hard to piece together the main ideas of the paper. First, they observe, as I had, that while a typical three wheel safe lock appears to allow 1,000,000 different combinations, mechanical imperfections coupled with narrow rules for selecting "good" combinations make the effective usable keyspace smaller than this by more than an order of magnitude. They put the number at 38,720 for the locks used by the government (this is within the range of 22,330 to 111,139 that I estimated in my Safecracking paper).

38,720 is a lot less than 1,000,000, but it probably still leaves safes outside the reach of manual exhaustive search. However, as the anonymous* [see note below] NSA authors point out, an attacker may be able to do considerably better than this by exploiting the mnemonic devices users sometimes employ when selecting combinations. Random numeric combinations are hard to remember. So user-selected combinations are often less than completely random. In particular (or at least I infer from the title and some speculation about the redacted sections), many safe users take advantage of the standard telephone keypad encoding (e.g., A, B, and C encode to "2", and so on) to derive their numeric combinations from (more easily remembered) six letter words. The word "SECRET" would thus correspond to a combination of 73-27-38.
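To make the encoding concrete, here is a minimal sketch of the word-to-combination mapping. It assumes the modern telephone keypad layout (with Q on 7 and Z on 9; older keypads omitted both letters), and the function name `word_to_combination` is purely illustrative. Which layout the NSA authors actually used is something I can't tell from the redacted paper.

```python
# Assumed layout: the modern telephone keypad (Q on 7, Z on 9).
KEYPAD = {
    "2": "ABC", "3": "DEF", "4": "GHI", "5": "JKL",
    "6": "MNO", "7": "PQRS", "8": "TUV", "9": "WXYZ",
}
LETTER_TO_DIGIT = {
    letter: digit for digit, letters in KEYPAD.items() for letter in letters
}


def word_to_combination(word):
    """Map a six-letter word to a three-number combination (hypothetical helper)."""
    word = word.upper()
    if len(word) != 6 or any(ch not in LETTER_TO_DIGIT for ch in word):
        raise ValueError("expected a six-letter word using only A-Z")
    digits = "".join(LETTER_TO_DIGIT[ch] for ch in word)
    # Split the six digits into three two-digit dial numbers.
    return tuple(int(digits[i:i + 2]) for i in (0, 2, 4))


if __name__ == "__main__":
    print("-".join(f"{n:02d}" for n in word_to_combination("SECRET")))
```

Run on "SECRET", this prints 73-27-38, matching the example above.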

This "clever" key selection scheme, of course, greatly aids the attacker, reducing the keyspace of probable combinations (after "bad" combinations are removed) to only a few hundred, even when a large dictionary is used. The paper further pruned the keyspaces for targets of varying vocabularies, proposing a "stooge" list of 176 common words, a "dimwit" list of 166 slightly less common words, and a "savant" list of 244 more esoteric words. It would take less than two hours to try all of these combinations by hand, and less than two minutes with the aid of a mechanical autodialer; on average, we'd expect to succeed in half that time.

Dictionary attacks aren't new, of course, but I've never heard of them being applied to safes before (outside of the folk wisdom to "try birthdays", etc). Certainly the net result is impressive -- the technique is much more efficient than exhaustive search, and it works even against "manipulation resistant" locks. Particularly notable is how two only moderately misguided ideas -- the use of words as key material plus overly restrictive (and yet widely followed) rules for selecting "good" combinations -- combine to create a single terrible idea that cedes enormous advantage to the attacker.

Although the paper is heavily censored, I was able to reconstruct most of the missing details without much difficulty. In particular, I spent an hour with an online dictionary and some simple scripts to create a list of 1757 likely six-digit word-based combinations, which is available at www.mattblaze.org/papers/cc-5.txt . (Addendum: A shorter list of 518 combinations based on the more restrictive combination selection guidelines apparently used by NSA can be found at www.mattblaze.org/papers/cc-15.txt ). If you have a safe, you might want to check to see if your combination is listed.

As gratifying as it was to discover kindred spirits in the classified world, I found the recently released papers especially interesting for what they reveal about the NSA's research culture. The papers reflect curiosity and intellect not just within the relatively narrow crafts of cryptology and SIGINT, but across the broader context in which they operate. There's a wry humor throughout many of the documents, much like what I remember pervading the old Bell Labs (the authors of the safe paper, for example, propose that they be given a cash award for efficiency gains arising from their discovery of an optimal safe combination (52-22-37), which they suggest everyone in the government adopt).

It must be a fun place to work.

Addendum (7-May-2008): Several people asked what makes the combinations proposed in the appendix of the NSA paper "optimal". The paper identifies 46-16-31 as the legal combination with the shortest total dialing distance, requiring moving the dial a total of 376 graduations. (Their calculation starts after the entry of the first number and includes returning the dial to zero for opening.) However, this appears to assume an unusual requirement that individual numbers be at least fifteen graduations apart from one another, which is more restrictive than the five (or sometimes ten) graduation minimum recommended by lock vendors. Apparently the official (but redacted) NSA rules for safe combinations are more restrictive than mechanically necessary. If so, the NSA has been using safe combinations selected from a much smaller than needed keyspace. In any case, under the NSA rule the fastest legal word-based combination indeed seems to be 52-22-37, which is derived from the word "JABBER", although the word itself was, inexplicably, redacted from the released paper. However, I found an even faster word-based combination that's legal under more conventional rules: 37-22-27, derived from the word "FRACAS". It requires a total dial movement of only 347 graduations.
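For readers who want to check the arithmetic, here is a small sketch of the dialing-distance calculation. The dialing convention is an assumption on my part (the usual three-wheel sequence: turn right past the second number twice and stop on it the third time, turn left past the third number once and stop on it the second time, then turn right back to zero), as are the function names and the choice to measure the spacing between every pair of numbers around the circular dial. Under those assumptions the model reproduces both totals quoted above.

```python
from itertools import combinations

DIAL = 100  # graduations on the dial


def dial_distance(combo):
    """Graduations moved after the first number is entered (assumed convention)."""
    first, second, third = combo
    # Turning right, the numbers decrease: two full turns plus the remainder.
    to_second = (first - second) % DIAL + 2 * DIAL
    # Turning left, the numbers increase: one full turn plus the remainder.
    to_third = (third - second) % DIAL + DIAL
    # Turning right again from the third number back to zero.
    to_zero = third
    return to_second + to_third + to_zero


def is_legal(combo, min_gap):
    """Assumed rule: every pair of numbers is at least min_gap graduations apart."""
    for x, y in combinations(combo, 2):
        gap = abs(x - y)
        if min(gap, DIAL - gap) < min_gap:
            return False
    return True


if __name__ == "__main__":
    for combo in [(46, 16, 31), (37, 22, 27)]:
        print(combo, dial_distance(combo), is_legal(combo, 15), is_legal(combo, 5))
```

With these assumptions, 46-16-31 comes to 376 graduations and passes the fifteen-graduation rule, while 37-22-27 comes to 347 graduations and is legal only under the five-graduation rule.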

* Well, somewhat anonymous. Although the authors' names are redacted in the released document, the paper's position in a previously released and partially redacted alphabetical index [pdf] suggests that one of their names lies between Campaigne and Farley and the other's between Vanderpool and Wiley.

Computer security depends ultimately on the security of the computer -- it's an indisputable tautology so self-evident that it seems almost insulting to point it out. Yet what may be obvious in the abstract is sometimes dangerously under-appreciated in practice. Security people come predominantly from software-centric backgrounds and we're often predisposed to relentlessly scrutinize the things we understand best while quietly assuming away everything else. But attackers, sadly, are under no obligation to play to our analytical preferences. Several recent research results make an eloquent and persuasive case that a much broader view of security is needed. A bit of simple hardware trickery, we're now reminded, can subvert a system right out from under even the most carefully vetted and protected software.

Earlier this year, Princeton graduate student Alex Halderman and seven of his colleagues discovered practical techniques for extracting the contents of DRAM memory, including cryptographic keys, after a computer has been turned off [link]. This means, among other worries, that if someone -- be it a casual thief or a foreign intelligence agent -- snatches your laptop, the fact that it had been "safely" powered down may be insufficient to protect your passwords and disk encryption keys. And the techniques are simple and non-destructive, involving little more than access to the memory chips and some canned-air coolant.

If that's not enough, this month at the USENIX workshop on Large-Scale Exploits and Emergent Threats, Samuel T. King and five colleagues at the University of Illinois Urbana-Champaign described a remarkably efficient approach to adding hardware backdoors to general-purpose computers [pdf]. Slipping a small amount of malicious circuitry (just a few thousand extra gates) into a CPU's design is sufficient to enable a wide range of remote attacks that can't be detected or prevented by conventional software security approaches. To carry out such attacks requires the ability to subvert computers wholesale as they are built or assembled. "Supply chain" threats thus operate on a different scale than we're used to thinking about -- more in the realm of governments and organized crime than lone malfeasants -- but the potential effects can be absolutely devastating under the right conditions.

And don't forget about your keyboard. At USENIX Security 2006, Gaurav Shah, Andres Molina and I introduced JitterBug attacks [pdf], in which a subverted keyboard can leak captured passwords and other secrets over the network through a completely uncompromised host computer, via a simple covert timing channel. The usual countermeasures against keyboard sniffers -- virus scanners, network encryption, and so on -- don't help here. JitterBug attacks are potentially viable not only at the retail level (via a surreptitious "bump" in the keyboard cable), but also wholesale (by subverting the supply chain or through viruses that modify keyboard firmware).
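A toy sketch may help show how little machinery a timing channel of this kind needs. The idea illustrated here is that the device adds a small delay to each keystroke so that the gap between keystrokes, taken modulo a fixed window, signals one bit to an observer who sees only packet timing. The 20 ms window, the function names, and the decoding threshold are all illustrative assumptions; this is a simplified model, not the implementation from the paper.

```python
def encode(delays_ms, bits, window_ms=20):
    """Pad each inter-keystroke delay so (delay mod window) signals one bit."""
    padded = []
    for delay, bit in zip(delays_ms, bits):
        # A 1 is signalled by landing mid-window, a 0 by landing on a boundary.
        target = window_ms // 2 if bit else 0
        extra = (target - delay) % window_ms  # always less than one window
        padded.append(delay + extra)
    return padded


def decode(delays_ms, window_ms=20):
    """Recover bits from observed delays, tolerating a little network jitter."""
    bits = []
    for delay in delays_ms:
        remainder = delay % window_ms
        near_middle = window_ms / 4 <= remainder < 3 * window_ms / 4
        bits.append(1 if near_middle else 0)
    return bits


if __name__ == "__main__":
    typed = [103, 87, 250, 141]   # hypothetical natural typing gaps, in ms
    secret = [1, 0, 1, 1]
    sent = encode(typed, secret)
    observed = [d + 3 for d in sent]  # simulate 3 ms of added network delay
    print(sent, decode(observed))
```

The added delay is never more than one window, which is why a typist would be unlikely to notice it, and the decoder's tolerance band is what lets the signal survive modest jitter in transit.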

Should you actually be worried? These attacks probably don't threaten all, or even most, users, but the risk that they might be carried out against any particular target isn't really the main concern here. The larger issue is that they succeed by undermining our assumptions about what we can trust. They work because our attention is focused elsewhere.

An encouraging (if rare) sign of progress in Internet security over the last decade has been the collective realization that even when we can't figure out how to exploit a vulnerability, someone else eventually might. Thankfully, it's now become (mostly) accepted practice to fix newly discovered software weaknesses even if we haven't yet worked out all the details of how to use them to conduct attacks. We know from hard experience that when "theoretical" vulnerabilities are left open long enough, the bad guys eventually figure out how to make them all too practical.

But there's a different attitude, for whatever reason, toward the underlying hardware. Even the most paranoid among us often take the integrity of computer hardware as an article of faith, something not to be seriously questioned. A common response to hardware security research is bemused puzzlement at how we overpaid academics waste our time on such impractical nonsense when there are so many real problems we could be solving instead. (Search the comments on Slashdot and other message boards about the papers cited above for examples of this reaction.)

Part of the disconnect may be because hardware threats are so unpleasant to think about from the software perspective; there doesn't seem to be much we can do about them except to hope that they won't affect us. Until, of course, they eventually do.

Reluctance to acknowledge new kinds of threats is nothing new, and it isn't limited to casual users. Even the military is susceptible. For example, Tempest attacks against cryptographic processors, in which sensitive data radiates out via spurious RF and other emanations, were first discovered by Bell Labs engineers in 1943. They noticed signals that correlated with the plain text of encrypted teletype messages coming from a certain piece of crypto hardware. According to a recently declassified NSA paper on Tempest [pdf]:

Bell Telephone faced a dilemma. They had sold the equipment to the military with the assurance that it was secure, but it wasn't. The only thing they could do was to tell the Signal Corps about it, which they did. There they met the charter members of a club of skeptics who could not believe that these tiny pips could really be exploited under practical field conditions. They are alleged to have said something like: "Don't you realize there's a war on? We can't bring our cryptographic operations to a screeching halt based on a dubious and esoteric laboratory phenomenon. If this is really dangerous, prove it."

Some things never change. Fortunately, the Bell Labs engineers quickly set up a persuasive demonstration, and so the military was ultimately convinced to develop countermeasures. That time, they were able to work out the details before the enemy did.

Thanks to Steve Bellovin for pointing me to www.nsa.gov/public/crypt_spectrum.cfm, a small trove of declassified NSA technical papers from the 1970's and 1980's, all of which are well worth reading. And no discussion of underlying security would be complete without a reference to Ken Thompson's famous Turing award lecture, Reflections on Trusting Trust.

I was part of a panel on warrantless wiretapping at last week's RSA Conference in San Francisco. The keynote session, which was moderated by the NY Times' Eric Lichtblau and which included former NSA official Bill Crowell, CDT's Jim Dempsey, attorney David Rivkin, and me, focused on the role of the judiciary in national security wiretaps. Ryan Singel covered the proceedings in a brief piece on Wired's Threat Level blog.

There's a tendency to view warrantless wiretaps in strictly legal or political terms and to assume that the interception technology will correctly implement whatever the policy is supposed to be. But the reality isn't so simple. I found myself the sole techie on the RSA panel, so my role was largely to point out that this is as much an issue of engineering as it is legal oversight. And while we don't know all the details about how NSA's wiretaps are being carried out in the US, what we do know suggests some disturbing architectural choices that make the program especially vulnerable to over-collection and abuse. In particular, assuming Mark Klein's AT&T documents are accurate, the NSA infrastructure seems much farther inside the US telecom infrastructure than would be appropriate for intercepting the exclusively international traffic that the government says it wants. The taps are apparently in domestic backbone switches rather than, say, in cable heads that leave the country, where international traffic is most concentrated (and segregated). Compounding the inherent risks of this odd design is the fact that the equipment that pans for nuggets of international communication in the stream of (off-limits) domestic traffic is apparently made up entirely of hardware provided and configured by the government, rather than the carriers. It's essentially equivalent to giving the NSA the keys to the phone company central office and hoping that they figure out which wires are the right ones to tap.

Technical details matter here. A recent paper I published with some colleagues goes into more depth about some of these issues; see a previous blog entry (linked here) about our paper.

The RSA conference itself is quite the extravaganza of commerce and technology, about as far as I care to venture from the lower-budget academic and research gatherings that comprise my natural habitat. There were something like 18,000 attendees at RSA last week, many willingly paying upwards of $2000 for the privilege. The scale is just staggeringly huge, with a level of overproduction and polish more typically associated with a Hollywood movie, or maybe a Rolling Stones concert. Some of the speakers had private dressing rooms backstage. (I didn't have one to myself, but they did lay out a nice cheese spread for us in the green room.) They made us stop at the makeup trailer before they let us on stage (I'm mildly allergic, it turns out).

If there's a silver lining under all this bling, it's that digital security is unquestionably considered serious business these days. Now we just have to figure out the technical part.

I'm delighted to report that USENIX, probably the most important technical society at which I publish (and on whose board I serve), has taken a long-overdue lead toward openly disseminating scientific research. Effective immediately, all USENIX proceedings and papers will be freely available on the USENIX web site as soon as they are published [link]. (Previously, most of the organization's proceedings required a member login for access for the first year after their publication.)

For years, many authors have made their papers available on their own web sites, but the practice is haphazard, non-archival, and, remarkably, actively discouraged by the restrictive copyright policies of many journals and conferences. So USENIX's step is important both substantively and symbolically. It reinforces why scientific papers are published in the first place: not as proprietary revenue sources, but to advance the state of the art for the benefit of society as a whole.

Unfortunately, other major technical societies that sponsor conferences and journals still cling to the antiquated notion, rooted in a rapidly-disappearing print-based publishing economy, that they naturally "own" the writings that volunteer authors, editors and reviewers produce. These organizations, which insist on copyright control as a condition of publication, argue that the sale of conference proceedings and journal subscriptions provides an essential revenue stream that subsidizes their other good works. But this income, however well it might be used, has evolved into an ill-gotten windfall. We write scientific papers first and last because we want them read. When papers were actually printed on paper it might have been reasonable to expect authors to donate the copyright in exchange for production and distribution. Today, of course, such a model seems, at best, quaintly out of touch with the needs of researchers and academics who can no longer tolerate the delay or expense of seeking out printed copies of far-flung documents they expect to find on the web.

Organizations devoted to computing research should recognize this not-so-new reality better than anyone. It's time for ACM and IEEE to follow USENIX's leadership in making scientific papers freely available to all comers. Please join me in urging them to do so.

In the month since its publication, Lawrence Wright's New Yorker profile of National Intelligence Director Mike McConnell has been widely picked apart for morsels of insight into the current administration's attitudes on the finer points of interrogation, wiretaps and privacy. I was just re-reading the piece, and, questions of waterboarding and torture aside, I was struck by this unchallenged retelling of the recent history of US cryptography regulation:

In the nineties, new encryption software that could protect telephone conversations, faxes, and e-mails from unwarranted monitoring was coming on the market, but the programs could also block entirely legal efforts to eavesdrop on criminals or potential terrorists. Under McConnell's direction, the N.S.A. developed a sophisticated device, the Clipper Chip, with a superior ability to encrypt any electronic transmission; it also allowed law-enforcement officials, given the proper authority, to decipher and eavesdrop on the encrypted communications of others. Privacy advocates criticized the device, though, and the Clipper was abandoned by 1996. "They convinced the folks on the Hill that they couldn't trust the government to do what it said it was going to do," Richard Wilhelm, who was in charge of information warfare under McConnell, says.

But that's not actually what happened. In fact, almost everything about this quote -- the whos, the whys and the whens -- is not merely slanted in its interpretation, but factually contradicted by the public record.

First, the Clipper Chip itself was abandoned not because of concerns about privacy (although it certainly became a lightning rod for criticism on that front), but rather because it was found to have serious technical vulnerabilities that had escaped the NSA's internal review process and that rendered the system ineffective for its intended purpose. I discovered and published in the open literature the first of these flaws at the ACM CCS conference in 1994, about a year after Clipper's introduction. (Technically inclined readers can see Protocol Failure in the Escrowed Encryption Standard [pdf link] for details.)

More generally, while Congress (presumably the Hill on which the folks referred to above resided) held several hearings on the subject, it never actually enacted (or even voted on) any cryptography legislation during the 1990's. Instead, the relaxation of cryptography export regulations (the lever through which the government controlled US encryption software and hardware) was entirely a product of the executive branch. Department of Commerce export regulations that took effect at the beginning of 2000 effectively eliminated the major controls on mass-market and open source encryption products, and in 2004 the rules were relaxed even further. In other words, the current policy promoting widely available encryption has been actively supported not just by the previous administration, but by the present one as well.

Government attempts in the 1990's to control cryptography were ultimately doomed not by some kind of weak-kneed policy capitulation (even if some in the national security community might now find it convenient to portray it as such), but by inexorable advances in technology (particularly what Larry Lessig calls "west coast code"). Even before the hardware-only Clipper program was announced, the performance of software-based encryption on general purpose computers had become competitive with that of expensive purpose-built encryption chips. At the same time, growth in the largely open-source-based networked computing world increasingly depended on reliable and inexpensive communications security, something fundamentally incompatible with the key escrow scheme underlying Clipper. Coupled with the inherent risk that a central encryption key database would itself become a target for foreign intelligence agents, it became apparent to just about everyone by the end of the decade that decontrol was the only viable policy option.

Tellingly, even after the attacks of September 11th, 2001 the Bush administration made no serious attempt to control or otherwise rein in the public availability or use of strong encryption. Indeed, its only action on cryptography policy came in 2004, when it further liberalized the few remaining export controls on the technology.

It's unfortunate that the New Yorker failed to check the facts about Clipper, but that's not the central concern here. Intelligence agencies (and their officials) largely operate under a thick veil of legally enforced secrecy, carrying with it a special obligation to be scrupulously truthful in their analysis. Should we trust them to be more forthright than the former official quoted in the excerpt when the facts are not so easily checked? We often have no choice.

When I published my Clipper paper in 1994, the NSA -- to its great credit at the time -- immediately acknowledged the problems, despite the embarrassment the whole affair no doubt caused them. And that is precisely how we must expect our intelligence agencies to behave. Intelligence spun to serve a political narrative isn't intelligence, it's just politics.

Photo above: Mykotronx MYK-78T Clipper Chip, as installed in an AT&T TSD-3600E encrypting telephone. More Clipper photos here.

I'll be giving a talk tomorrow (Thursday 14 February) about the review of electronic voting systems we conducted last fall here at U. Penn. For those in the Philadelphia area, the talk is on the Penn Campus in the Levine Hall Auditorium from 3:00-4:15pm. Sorry for not getting this up on the blog earlier.

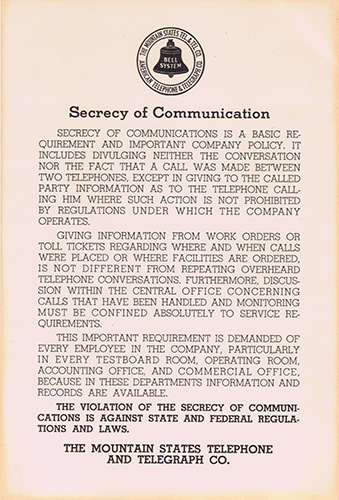

A recurring theme in this blog over the last year has been how the sweeping surveillance technology envisioned by the 2007 US Protect America Act introduces fundamental technical vulnerabilities into the nation's communications infrastructure. These risks should worry law enforcement and the national security community at least as much as they worry civil liberties advocates. A post last October mentioned an analysis that I was writing with Steve Bellovin, Whit Diffie, Susan Landau, Peter Neumann and Jennifer Rexford.

The final version of our paper, "Risking Communications Security: Potential Hazards of the Protect America Act," will be published in the January/February 2008 issue of IEEE Security and Privacy, which hits the stands in a few weeks. But you can download a preprint of our article today at https://www.mattblaze.org/papers/paa-ieee.pdf [PDF]. Remember, you saw it here first.

This week the Senate takes up whether to make the warrantless wiretapping act permanent, so the subject is extremely timely. The technical risks created by unsupervised wiretapping on this scale are enormously serious (and reason enough to urge Congress to let this misguided law expire). But safety and security may be subordinated in the debate to more entrenched interests. In particular, AT&T and other communications carriers have been lobbying hard for an amendment that gives them retroactive immunity from any liability they might have had for turning over Americans' communications and call records to the government, legally or not, before the act passed.

As someone who began his professional career in the Bell System (and who stayed around through several of its successors), the push for telco immunity represents an especially bitter disillusionment for me. Say what you will about the old Phone Company, but respect for customer privacy was once a deeply rooted point of pride in the corporate ethos. There was no faster way to be fired (or worse) than to snoop into call records or facilitate illegal wiretaps, well intentioned or not. And it was genuinely part of the culture; we believed in it, even those of us ordinarily disposed toward a skeptical view of the official company line. Now it all seems like just another bit of cynical, focus-group-tested PR.

Apologies to David Byrne for paraphrasing his hauntingly prescient lyrics. And thanks to Eric Cronin for discovering and scanning the now sadly nostalgic reminder reproduced at right.

I've dabbled with photography for most of my life, defiantly regressing toward bulkier equipment and more cumbersome technique as cameras become inexorably smaller and easier to use with each successive generation. Lately, I've settled on a large format camera with a tethered digital scanning back, which is about as unwieldy as I'd likely want to endure, at least without employing a pack mule.

But for all the effort I'm apparently willing to expend to take pictures, I'm pathologically negligent about actually doing anything with them once they've been taken. Scattered about my home and office are boxes of unprinted negatives and disk drives of unprocessed captures (this is, admittedly, overwhelmingly for the best). So it was somewhat against my natural inclinations that a few years ago I started making available an idiosyncratic (that is to say, random) selection of images elsewhere on this web site.

Publishing photos on my own server has been a mixed bag. It maximizes my control over the presentation, which appeals to the control freak in me, but it also creates a lot of non-photographic work every time I want to add a new picture -- editing html, creating thumbnails, updating links and indexes. I've got generally ample bandwidth, but every now and then someone hotlinks into an image from some wildly popular place and everything slows to a crawl. I could build better tools and install better filters to fix most of these shortcomings, but, true to my own form I suppose, I haven't. So a few weeks ago I finally gave in to the temptations of convenience and opened an account on Flickr.

I'm slowly (but thus far, at least, steadily) populating my Flickr page with everything from casual snapshots to dubiously artistic abstractions. It's still an experiment for me, but a side effect has been to bring into focus (sorry) some of what's good and what's bad about how we publish on the web.

Flickr is a mixed bag, too, but it's a different mix than doing everything on my own has been. It's certainly easier. And there's an appeal to being able to easily link photos into communities of other photographers, leave comments, and attract a more diverse audience. So far it seems not to have scaled up unmanageably or become hopelessly mired in robotic spam. They make it easy to publish under a Creative Commons license, which I use for most of my pictures. But there are also small annoyances. The thumbnail resizing algorithm sometimes adds too much contrast for my taste -- I'd welcome an option to re-scale certain images myself. The page layout is inflexible. There's no way to control who can view (and mine data from) your social network. And they get intellectual property wrong in small but important ways. For example, every published photo is prominently labeled "this photo is public", risking the impression that copyrighted and CC-licensed photos are actually in the public domain and free for any use without permission or attribution.

I wonder how most people feel about that; do we think of our own creative output, however humble, as being deserving of protection? Or do we tend to regard intellectual property as legitimately applying only to "serious" for-profit enterprises (with Hollywood and the music industry rightfully occupying rarefied positions at the top of the socio-legal hierarchy)? A couple of years ago I led a small discussion group for incoming students at my overpriced Ivy-league university. The topic was Larry Lessig's book Free Culture, and I started by asking what I thought was a softball question about who was exclusively a content consumer and who occasionally produced creative things deserving protection and recognition. To my surprise, everyone put themselves squarely in the former category. These were talented kids, mostly from privileged backgrounds -- hardly a group that tends to be shy about their own creativity. Maybe it was the setting or maybe it was how I asked. But perhaps their apparent humility was symptomatic of something deeply rooted in our current copyright mess. Until we can abandon this increasingly artificial distinction -- "consumers" and "content producers", us against them -- our lopsided copyright laws seem bound to continue on their current draconian trajectory, with public disrespect for the rules becoming correspondingly all the more flagrant.