This Monday, The Intercept broke the story of a leaked classified NSA report [pdf link] on an email-based attack on various US election systems just before the 2016 US general election.

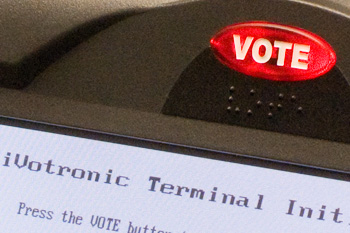

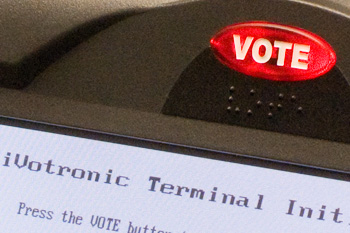

The NSA report, dated May 5, 2017, details what I would assume is only a small part of a more comprehensive investigation into Russian intelligence services' "cyber operations" to influence the US presidential race. The report analyzes several relatively small-scale targeted email operations that occurred in August and October of last year. One campaign used "spearphishing" techniques against employees of third-party election support vendors (which manage voter registration databases for county election offices). Another -- our focus here -- targeted 112 unidentified county election officials with "trojan horse" malware disguised inside plausibly innocuous-looking Microsoft Word attachments. The NSA report does not say whether these attacks were successful in compromising any county voting offices or even what the malware actually tried to do.

Targeted phishing attacks and malware hidden in email attachments might not seem like the kind of high-tech spy tools we associate with sophisticated intelligence agencies like Russia's GRU. They're familiar annoyances to almost anyone with an email account. And yet they can serve as devastatingly effective entry points into even very sensitive systems and networks.

So what might an attacker -- particularly a state actor looking to disrupt an election -- accomplish with such low-tech attacks, should they have succeeded? Unfortunately, the possibilities are not comforting.

Encryption, it seems, at long last is winning. End-to-end encrypted communication systems are protecting more of our private communication than ever, making interception of sensitive content as it travels over (insecure) networks like the Internet less of a threat than it once was. All this is good news, unless you're in the business of intercepting sensitive content over networks. Denied access to network traffic, criminals and spies (whether on our side or theirs) will resort to other approaches to get access to data they seek. In practice, that often means exploiting security vulnerabilities in their targets' phones and computers to install surreptitious "spyware" that records conversations and text messages before they can be encrypted. In other words, wiretapping today increasingly involves hacking.

This, as you might imagine, is not without controversy.

Recent news stories, notably this story in USA Today and this story in the Washington Post, have brought to light extensive use of "Stingray" devices and "tower dumps" by federal -- and local -- law enforcement agencies to track cellular telephones.

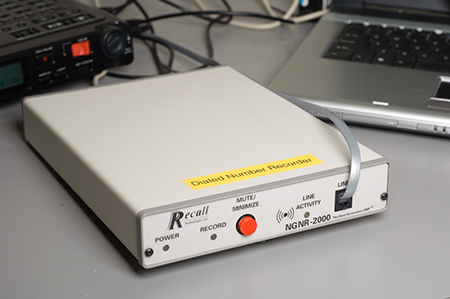

Just how does all this tracking and interception technology work? There are actually a surprising number of different ways law enforcement agencies can track and get information about phones, each of which exposes different information in different ways. And it's all steeped in arcane surveillance jargon that's evolved over decades of changes in the law and the technology. So now seems like a good time to summarize what the various phone tapping methods actually are, how they work, and how they differ from one another.

Note that this post is concerned specifically with phone tracking as done by US domestic law enforcement agencies. Intelligence agencies engaged in bulk surveillance, such as the NSA, have different requirements, constraints, and resources, and generally use different techniques. For example, it was recently revealed that NSA has access to international phone "roaming" databases used by phone companies to route calls. The NSA apparently collects vast amounts of telephone "metadata" to discover hidden communications patterns, relationships, and behaviors across the world. There's also evidence of some data sharing to law enforcement from the intelligence side (see, for example, the DEA's "Hemisphere" program). But, as interesting and important as that is, it has little to do with the "retail" phone tracking techniques used by local law enforcement, and it's not our focus here.

Phone tracking by law enforcement agencies, in contrast to intelligence agencies, is intended to support investigations of specific crimes and to gather evidence for use in prosecutions. And so their interception technology -- and the underlying law -- is supposed to be focused on obtaining information about the communications of particular targets rather than of the population at large.

In all, there are six major distinct phone tracking and tapping methods used by investigators in the US: "call detail records requests", "pen register/trap and trace", "content wiretaps", "E911 pings", "tower dumps", and "Stingray/IMSI Catchers". Each reveals somewhat different information at different times, and each has its own legal implications. An agency might use any or all of them over the course of a given investigation. Let's take them one by one.

New Jersey was hit hard by Hurricane Sandy, and many parts of the state still lack electricity and basic infrastructure. Countless residents have been displaced, at least temporarily. And election day is on Tuesday.

There can be little doubt that many New Jerseyans, whether newly displaced or rendered homebound, who had originally intended to cast their votes at their normal neighborhood polling stations will be unable to do so next week. Unless some new flexible voting options are made available, many people will be disenfranchised, perhaps altering the outcome of races. There are compelling reasons for New Jersey officials to act quickly to create viable, flexible, secure and reliable voting options for their citizens in this emergency.

A few hours ago, Gov. Christie announced that voters unable to reach their normal polling places would be permitted to vote by electronic mail. The directive, outlined here [pdf], allows displaced registered voters to request a "mail in" ballot from their local county clerk by email. The voter can then return the ballot, along with a signed "waiver of secrecy" form, by email, to be counted as a regular ballot. (The process is based on one used for overseas and military voters, but on a larger scale and with a greatly accelerated timeframe.)

Does email voting make sense for New Jersey during this emergency? It's hard to say one way or the other without a lot more information than has been released so far about how the system will work and how it will be secured.

A recent NY Times piece, on the response to a "credible, specific and unconfirmed" threat of a terrorist plot against New York on the tenth anniversary of the September 11 attacks, includes this strikingly telling quote from an anonymous senior law enforcement official:

"It's 9/11, baby," one official said. "We have to have something to get spun up about."

Indeed. But while it's easy to understand this remark as a bitingly candid assessment of the cynical and now reflexive fear mongering that we have allowed to become the most lasting and damaging legacy of Al Qaeda's mad war, I must also admit that there's another, equally true but much sadder, interpretation, at least for me.

We have to get spun up about something because the alternative is simply too painful. I can find essentially two viable emotional choices for tomorrow. One is to get ourselves "spun up" about a new threat, worry, take action, defend the homeland and otherwise occupy ourselves with the here and now. The other is quieter and simpler but far less palatable: to privately revisit the unspeakable horrors of that awful, awful, day, dislodging shallowly buried memories that emerge all too easily ten years later.

The relentless retrospective news coverage that (inevitably) is accompanying the upcoming anniversary has more than anything else reactivated the fading sense of overwhelming, escalating sadness I felt ten years ago. Sadness was ultimately the only available response, even for New Yorkers like me who lived only a few miles from the towers. It was in many ways the city's proudest moment, everyone wanting and trying to help, very little panic. But really, there wasn't nearly enough for all of us to do. Countless first responders and construction workers rushed without a thought to ground zero for a rescue that quickly became a recovery operation. Medical personnel reported to emergency rooms to treat wounded survivors who largely didn't exist. You couldn't even donate blood, the supply of volunteers overwhelming the small demand. (Working for AT&T at the time, I went down to a midtown Manhattan switching office, hoping somehow to be able to help keep our phones working with most of the staff unable to get to work, but it was quickly clear I was only getting in the way of the people there who actually knew how to do useful work.)

All most of us could really do that day and in the days that followed was bear witness to the horror of senseless death and try to comprehend the enormity of what was lost. Last words to loved ones, captured in voicemails from those who understood enough about what was happening to know that they would never see their families again. The impossible choice made by so many to jump rather than burn to death. The ubiquitous memorials to the dead, plastered in photocopied posters on walls everywhere around the city, created initially as desperate pleas for information on the missing.

Rudy Giuliani, a New York mayor for whom I normally have little patience, found a deep truth that afternoon when he was asked how many were lost. He didn't know, he said, but he cautioned that it would be "more than any of us can bear".

I remember trying to get angry at the bastards who inflicted this on us, but it didn't really work. Whoever they were, I knew they must be, in the end, simply crazy, beyond the reach of any meaningful kind of retribution. Anger couldn't displace the helplessness and sadness.

Remember all this or get "spun up"? Easy, easy choice.

Everything else aside, the recent Wikileaks/Guardian fiasco (in which the passphrase for a widely-distributed encrypted file containing an un-redacted database of Wikileaks cables ended up published in a book by a Guardian editor) nicely demonstrates an important cryptologic principle: the security properties of keys used for authentication and those used for decryption are quite different.

Authentication keys, such as login passwords, become effectively useless once they are changed (unless they are re-used in other contexts). An attacker who learns an old authentication key would have to travel back in time to make any use of it. But old decryption keys, even after they have been changed, can remain as valuable as the secrets they once protected, forever. Old ciphertext can still be decrypted with the old keys, even if newer ciphertext can't.

And it appears that confusion between these two concepts is at the root of the leak here. Assuming the Guardian editor's narrative accurately describes his understanding of what was going on, he believed that the passphrase he had been given was a temporary password that would have already been rendered useless by the time his book would be published. But that's not what it was at all; it was a decryption key -- for a file whose ciphertext was widely available.
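The difference between the two kinds of keys can be made concrete with a small sketch. Everything in it is an assumption made for illustration: the `LoginServer` class, the passphrases, and especially the toy SHA-256 keystream cipher, which stands in for a real cipher and has nothing to do with the file format actually involved in the leak. It shows only that changing a password invalidates the old one, while an old decryption key keeps working on any copy of the old ciphertext.

```python
import hashlib
import hmac

SALT = b"illustrative-salt"  # hypothetical fixed salt, for the demo only


def derive_key(passphrase: str) -> bytes:
    """Stretch a passphrase into a 32-byte key with PBKDF2."""
    return hashlib.pbkdf2_hmac("sha256", passphrase.encode(), SALT, 10_000)


def toy_cipher(key: bytes, data: bytes) -> bytes:
    """Toy stream cipher (NOT for real use): XOR data with a SHA-256 keystream.

    Because XOR is its own inverse, the same function encrypts and decrypts.
    """
    stream = b""
    counter = 0
    while len(stream) < len(data):
        stream += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(d ^ s for d, s in zip(data, stream))


class LoginServer:
    """Hypothetical server that stores only a hash of the current password."""

    def __init__(self, password: str) -> None:
        self._stored = derive_key(password)

    def change_password(self, new_password: str) -> None:
        self._stored = derive_key(new_password)

    def check(self, password: str) -> bool:
        return hmac.compare_digest(self._stored, derive_key(password))


# The same passphrase is used two ways: as a login password and as a file key.
old_passphrase = "old-passphrase"
server = LoginServer(old_passphrase)
ciphertext = toy_cipher(derive_key(old_passphrase), b"unredacted cables")

# The password is changed; copies of the ciphertext are already out in the world.
server.change_password("new-passphrase")

# Authentication: the old passphrase is now useless.
old_login_ok = server.check(old_passphrase)

# Decryption: the old passphrase still opens every copy of the old ciphertext.
recovered = toy_cipher(derive_key(old_passphrase), ciphertext)

print(old_login_ok, recovered)
```

The only way to "revoke" the decryption key in this sketch would be to destroy every copy of `ciphertext`, which is not possible once the file has been widely distributed.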

It might be tempting for us, as cryptographers and security engineers, to snicker at both Wikileaks and the Guardian for the sloppy practices that allowed this high-stakes mishap to have happened in the first place. But we should also observe that confusion between the semantics of authentication and of confidentiality happens because these are, in fact, subtle concepts that are as poorly understood as they are intertwined, even among those who might now be laughing the hardest. The crypto literature is full of examples of protocol failures that have exactly this confusion at their root.

And it should also remind us that, again, cryptographic usability matters. Sometimes quite a bit.

Last week at the 20th Usenix Security Symposium, Sandy Clark, Travis Goodspeed, Perry Metzger, Zachary Wasserman, Kevin Xu, and I presented our paper Why (Special Agent) Johnny (Still) Can't Encrypt: A Security Analysis of the APCO Project 25 Two-Way Radio System [pdf]. I'm delighted and honored to report that we won an "Outstanding Paper" award.

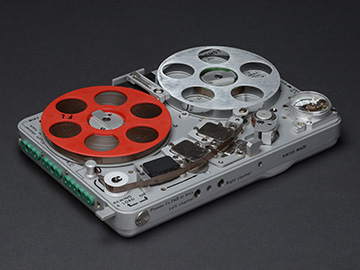

APCO Project 25 ("P25") is a suite of wireless communications protocols designed for government two-way (voice) radio systems, used for everything from dispatching police and other first responders by local government to coordinating federal tactical surveillance operations against organized crime and suspected terrorists. P25 is intended to be a "drop-in" digital replacement for the analog FM systems traditionally used in public safety two-way radio, adding some additional features and security options. It uses the same frequency bands and channel allocations as the older analog systems it replaces, but with a digital modulation format and various higher-level application protocols (the most important being real-time voice broadcast). Although many agencies still use analog radio, P25 adoption has accelerated in recent years, especially among federal agencies.

One of the advantages of digital radio, and one of the design goals of P25, is the relative ease with which it can encrypt sensitive, confidential voice traffic with strong cryptographic algorithms and protocols. While most public safety two-way radio users (local police dispatch centers and so on) typically don't use (or need) encryption, for others -- those engaged in surveillance of organized crime, counter espionage and executive protection, to name a few -- it has become an essential requirement. When all radio transmissions were in the clear -- and vulnerable to interception -- these "tactical" users needed to be constantly mindful of the threat of eavesdropping by an adversary, and so were forced to be stiltedly circumspect in what they could say over the air. For these users, strong, reliable encryption not only makes their operations more secure, it frees them to communicate more effectively.

So how secure is P25? Unfortunately, the news isn't very reassuring.

The 2010 U.S. Wiretap Report was released a couple of weeks ago, the latest in a series of puzzles published annually, on and off, by congressional mandate since the Nixon administration. The report, as its name implies, summarizes legal wiretapping by federal and state law enforcement agencies. The reports are puzzles because they are notoriously incomplete; the data relies on spotty reporting, and information on "national security" (FISA) taps is excluded altogether. Still, it's the most complete public picture of wiretapping as practiced in the US that we have, and as such, is of likely interest to many readers here.

We now know that there were at least 3194 criminal wiretaps last year (1207 of these were by federal law enforcement and 1987 were done by state and local agencies). The previous year there were only 2376 reported, but it isn't clear how much of this increase was due to improved data collection in 2010. Again, this is only "Title III" content wiretaps for criminal investigations (mostly drug cases); it doesn't include "pen registers" that record call details without audio or taps for counterintelligence and counterterrorism investigations, which presumably have accounted for an increasing proportion of intercepts since 2001. And there's apparently still a fair bit of underreporting in the statistics. So we don't really know how much wiretapping the government actually does in total or what the trends really look like. There's a lot of noise among the signals here.
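For readers who want to check the arithmetic, here is a quick sketch using only the figures quoted above (the variable names are mine). It confirms that the federal and state counts add up to the reported total and works out the size of the year-over-year jump, with the caveat already noted that some of that jump may reflect better reporting rather than more wiretapping.

```python
# Figures as quoted from the 2010 Wiretap Report in the text above.
federal_2010 = 1207
state_local_2010 = 1987
total_2009 = 2376

total_2010 = federal_2010 + state_local_2010
increase = total_2010 - total_2009
pct_increase = 100 * increase / total_2009
federal_share = 100 * federal_2010 / total_2010

print(f"2010 total: {total_2010}")
print(f"Increase over 2009: {increase} ({pct_increase:.1f}%)")
print(f"Federal share of 2010 taps: {federal_share:.1f}%")
```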

But for all the noise, one interesting fact stands out rather clearly. Despite dire predictions to the contrary, the open availability of cryptography has done little to hinder law enforcement's ability to conduct investigations.

A while back when I tried to sign up for a Facebook account it was almost indistinguishable from a phishing attack -- it kept urging me to give them my email and other passwords to "help" me keep in better contact with my friends. (I ended up giving up, but apparently not completely enough to prevent an endless stream of "friend" requests from showing up in my mailbox.)

Signing up for Google+ this week was different. It already knew who all my contacts were, no passwords required.

I'm not sure, in retrospect, which was more disconcerting. If FB signup raised my phishing defenses, joining G+ felt more like a cyber-Mafia shakedown. All that was missing from the exhaustive list of friends and loved ones was "... it would be a shame if something happened to these people."

I'd say to look for me there, but it seems you won't have to.

I'll be talking about computer security and cyberwar this morning live at 10am on WHYY-FM's otherwise excellent Radio Times show. For those who aren't up before the crack of noon, I'm told the show will also be repeated at 10pm and made available online as a podcast. (WHYY is the Philadelphia NPR affiliate.)