New Jersey was hit hard by Hurricane Sandy, and many parts of the state still lack electricity and basic infrastructure. Countless residents have been displaced, at least temporarily. And election day is on Tuesday.

There can be little doubt that many New Jerseyans, whether newly displaced or rendered homebound, who had originally intended to cast their votes at their normal neighborhood polling stations will be unable to do so next week. Unless some new flexible voting options are made available, many people will be disenfranchised, perhaps altering the outcome of races. There are compelling reasons for New Jersey officials to act quickly to create viable, flexible, secure and reliable voting options for their citizens in this emergency.

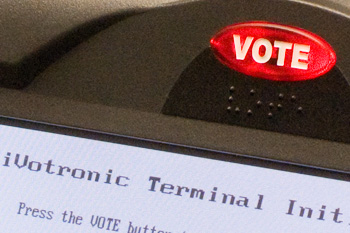

A few hours ago, Gov. Christie announced that voters unable to reach their normal polling places would be permitted to vote by electronic mail. The directive, outlined here [pdf], allows displaced registered voters to request a "mail in" ballot from their local county clerk by email. The voter can then return the ballot, along with a signed "waiver of secrecy" form, by email, to be counted as a regular ballot. (The process is based on one used for overseas and military voters, but on a larger scale and with a greatly accelerated timeframe.)

Does email voting make sense for New Jersey during this emergency? It's hard to say one way or the other without a lot more information than has been released so far about how the system will work and how it will be secured.

A recent NY Times piece, on the response to a "credible, specific and unconfirmed" threat of a terrorist plot against New York on the tenth anniversary of the September 11 attacks, includes this strikingly telling quote from an anonymous senior law enforcement official:

"It's 9/11, baby," one official said. "We have to have something to get spun up about."

Indeed. But while it's easy to understand this remark as a bitingly candid assessment of the cynical and now reflexive fear mongering that we have allowed to become the most lasting and damaging legacy of Al Qaeda's mad war, I must also admit that there's another, equally true but much sadder, interpretation, at least for me.

We have to get spun up about something because the alternative is simply too painful. I can find essentially two viable emotional choices for tomorrow. One is to get ourselves "spun up" about a new threat, worry, take action, defend the homeland and otherwise occupy ourselves with the here and now. The other is quieter and simpler but far less palatable: to privately revisit the unspeakable horrors of that awful, awful day, dislodging shallowly buried memories that emerge all too easily ten years later.

The relentless retrospective news coverage that (inevitably) is accompanying the upcoming anniversary has more than anything else reactivated the fading sense of overwhelming, escalating sadness I felt ten years ago. Sadness was ultimately the only available response, even for New Yorkers like me who lived only a few miles from the towers. It was in many ways the city's proudest moment, everyone wanting and trying to help, very little panic. But really, there wasn't nearly enough for all of us to do. Countless first responders and construction workers rushed without a thought to ground zero for a rescue that quickly became a recovery operation. Medical personnel reported to emergency rooms to treat wounded survivors who largely didn't exist. You couldn't even donate blood, the supply of volunteers overwhelming the small demand. (Working for AT&T at the time, I went down to a midtown Manhattan switching office, hoping somehow to be able to help keep our phones working with most of the staff unable to get to work, but it was quickly clear I was only getting in the way of the people there who actually knew how to do useful work.)

All most of us could really do that day and in the days that followed was bear witness to the horror of senseless death and try to comprehend the enormity of what was lost. Last words to loved ones, captured in voicemails from those who understood enough about what was happening to know that they would never see their families again. The impossible choice made by so many to jump rather than burn to death. The ubiquitous memorials to the dead, plastered in photocopied posters on walls everywhere around the city, created initially as desperate pleas for information on the missing.

Rudy Giuliani, a New York mayor for whom I normally have little patience, found a deep truth that afternoon when he was asked how many were lost. He didn't know, he said, but he cautioned that it would be "more than any of us can bear".

I remember trying to get angry at the bastards who inflicted this on us, but it didn't really work. Whoever they were, I knew they must be, in the end, simply crazy, beyond the reach of any meaningful kind of retribution. Anger couldn't displace the helplessness and sadness.

Remember all this or get "spun up"? Easy, easy choice.

Everything else aside, the recent Wikileaks/Guardian fiasco (in which the passphrase for a widely-distributed encrypted file containing an un-redacted database of Wikileaks cables ended up published in a book by a Guardian editor) nicely demonstrates an important cryptologic principle: the security properties of keys used for authentication and those used for decryption are quite different.

Authentication keys, such as login passwords, become effectively useless once they are changed (unless they are re-used in other contexts). An attacker who learns an old authentication key would have to travel back in time to make any use of it. But old decryption keys, even after they have been changed, can remain as valuable as the secrets they once protected, forever. Old ciphertext can still be decrypted with the old keys, even if newer ciphertext can't.
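To make the asymmetry concrete, here is a minimal Python sketch using only the standard library. The "cipher" is a toy keystream built from SHA-256 in counter mode (no nonce, no integrity check), chosen only so the example is self-contained; it is not what Wikileaks or the Guardian used and must not be used for real data. The function names (`make_verifier`, `check_password`, `xor_cipher`) are hypothetical.

```python
import hashlib
import hmac
import os

# --- Authentication: the verifier stores only a salted hash of the CURRENT password.
def make_verifier(password):
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)
    return salt, digest

def check_password(password, verifier):
    salt, digest = verifier
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)
    return hmac.compare_digest(candidate, digest)

# --- Confidentiality: a TOY stream cipher (SHA-256 in counter mode), illustration only.
def keystream(key, n):
    out = b""
    counter = 0
    while len(out) < n:
        out += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return out[:n]

def xor_cipher(key, data):
    # Encryption and decryption are the same XOR with the keystream.
    return bytes(a ^ b for a, b in zip(data, keystream(key, len(data))))

old_secret = "old passphrase"
new_secret = "new passphrase"

# Authentication key: once the password is changed, the old one is worthless.
verifier = make_verifier(old_secret)
verifier = make_verifier(new_secret)  # password changed
print(check_password(old_secret, verifier))  # False

# Decryption key: ciphertext made under the old key is already "out there".
old_key = hashlib.sha256(old_secret.encode()).digest()
leaked_ciphertext = xor_cipher(old_key, b"un-redacted cables")

# Switching keys protects only future files; the old copy still opens.
new_key = hashlib.sha256(new_secret.encode()).digest()
print(xor_cipher(old_key, leaked_ciphertext))  # b'un-redacted cables'
```

Changing the password invalidates the old one at the single place it is checked; changing an encryption key does nothing for ciphertext that has already been copied.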

And it appears that confusion between these two concepts is at the root of the leak here. Assuming the Guardian editor's narrative accurately describes his understanding of what was going on, he believed that the passphrase he had been given was a temporary password that would have already been rendered useless by the time his book would be published. But that's not what it was at all; it was a decryption key -- for a file whose ciphertext was widely available.

It might be tempting for us, as cryptographers and security engineers, to snicker at both Wikileaks and the Guardian for the sloppy practices that allowed this high-stakes mishap to happen in the first place. But we should also observe that confusion between the semantics of authentication and of confidentiality happens because these are, in fact, subtle concepts that are as poorly understood as they are intertwined, even among those who might now be laughing the hardest. The crypto literature is full of examples of protocol failures that have exactly this confusion at their root.

And it should also remind us that, again, cryptographic usability matters. Sometimes quite a bit.

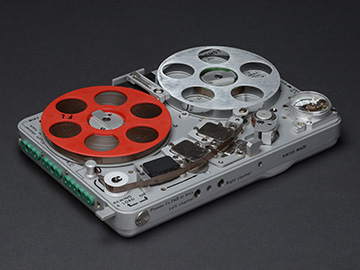

Last week at the 20th Usenix Security Symposium, Sandy Clark, Travis Goodspeed, Perry Metzger, Zachary Wasserman, Kevin Xu, and I presented our paper Why (Special Agent) Johnny (Still) Can't Encrypt: A Security Analysis of the APCO Project 25 Two-Way Radio System [pdf]. I'm delighted and honored to report that we won an "Outstanding Paper" award.

APCO Project 25 ("P25") is a suite of wireless communications protocols designed for government two-way (voice) radio systems, used for everything from dispatching police and other first responders by local government to coordinating federal tactical surveillance operations against organized crime and suspected terrorists. P25 is intended to be a "drop-in" digital replacement for the analog FM systems traditionally used in public safety two-way radio, adding some additional features and security options. It uses the same frequency bands and channel allocations as the older analog systems it replaces, but with a digital modulation format and various higher-level application protocols (the most important being real-time voice broadcast). Although many agencies still use analog radio, P25 adoption has accelerated in recent years, especially among federal agencies.

One of the advantages of digital radio, and one of the design goals of P25, is the relative ease with which it can encrypt sensitive, confidential voice traffic with strong cryptographic algorithms and protocols. While most public safety two-way radio users (local police dispatch centers and so on) typically don't use (or need) encryption, for others -- those engaged in surveillance of organized crime, counterespionage and executive protection, to name a few -- it has become an essential requirement. When all radio transmissions were in the clear -- and vulnerable to interception -- these "tactical" users needed to be constantly mindful of the threat of eavesdropping by an adversary, and so were forced to be stiltedly circumspect in what they could say over the air. For these users, strong, reliable encryption not only makes their operations more secure, it frees them to communicate more effectively.

So how secure is P25? Unfortunately, the news isn't very reassuring.

The 2010 U.S. Wiretap Report was released a couple of weeks ago, the latest in a series of puzzles published annually, on and off, by congressional mandate since the Nixon administration. The report, as its name implies, summarizes legal wiretapping by federal and state law enforcement agencies. The reports are puzzles because they are notoriously incomplete; the data relies on spotty reporting, and information on "national security" (FISA) taps is excluded altogether. Still, it's the most complete public picture of wiretapping as practiced in the US that we have, and as such, is of likely interest to many readers here.

We now know that there were at least 3194 criminal wiretaps last year (1207 of these were by federal law enforcement and 1987 were done by state and local agencies). The previous year there were only 2376 reported, but it isn't clear how much of this increase was due to improved data collection in 2010. Again, this is only "Title III" content wiretaps for criminal investigations (mostly drug cases); it doesn't include "pen registers" that record call details without audio or taps for counterintelligence and counterterrorism investigations, which presumably have accounted for an increasing proportion of intercepts since 2001. And there's apparently still a fair bit of underreporting in the statistics. So we don't really know how much wiretapping the government actually does in total or what the trends really look like. There's a lot of noise among the signals here.
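A quick check of the arithmetic, as a Python sketch using only the counts quoted above: the federal and state figures do sum to 3194, and the year-over-year jump works out to roughly a third. As the paragraph cautions, some unknown share of that rise may reflect improved data collection rather than more wiretapping, so the percentage says little about the real trend.

```python
# Reported Title III criminal wiretaps, as quoted in the text.
federal_2010 = 1207
state_local_2010 = 1987
total_2009 = 2376

total_2010 = federal_2010 + state_local_2010
increase = total_2010 - total_2009
pct_increase = 100 * increase / total_2009

print(total_2010)              # 3194
print(increase)                # 818
print(f"{pct_increase:.1f}%")  # 34.4%
```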

But for all the noise, one interesting fact stands out rather clearly. Despite dire predictions to the contrary, the open availability of cryptography has done little to hinder law enforcement's ability to conduct investigations.

A while back when I tried to sign up for a Facebook account it was almost indistinguishable from a phishing attack -- it kept urging me to give them my email and other passwords to "help" me keep in better contact with my friends. (I ended up giving up, but apparently not completely enough to prevent an endless stream of "friend" requests from showing up in my mailbox.)

Signing up for Google+ this week was different. It already knew who all my contacts were, no passwords required.

I'm not sure, in retrospect, which was more disconcerting. If FB signup raised my phishing defenses, joining G+ felt more like a cyber-Mafia shakedown. All that was missing from the exhaustive list of friends and loved ones was "... it would be a shame if something happened to these people."

I'd say to look for me there, but it seems you won't have to.

I'll be talking about computer security and cyberwar this morning live at 10am on WHYY-FM's otherwise excellent Radio Times show. For those who aren't up before the crack of noon, I'm told the show will also be repeated at 10pm as well as podcast online. (WHYY is the Philadelphia NPR affiliate.)

If there is one area where the Web and Internet publishing is truly fulfilling its promise, it has to be the free and open availability of scholarly research from all over the world, to anyone who cares to study it. Today's academic does not just publish or perish, but does so on the Web first. This has made science and scholarship not only more democratic -- no journal subscriptions or university library access required to participate -- but faster and better.

And many of the most prominent scientific and engineering societies are doing everything in their power to put a stop to it. They want to get paid first.

I've written here before about the way certain major technical societies use regressive, coercive copyright policies to obtain from authors exclusive rights to the papers that appear at the conferences and in the journals that they organize. These organizations, rooted in a rapidly disappearing print-based publishing economy, believe that they naturally "own" the writings that (unpaid) authors, editors and reviewers produce. They insist on copyright control as a condition of publication, arguing that the sale of conference proceedings and journal subscriptions provides an essential revenue stream that subsidizes their other good works. But this income, however well it might be used, has evolved into an ill-gotten entitlement. We write scientific papers first and last because we want them read. When papers were disseminated solely in print form it might have been reasonable to expect authors to donate the copyright in exchange for production and distribution. Today, of course, this model seems, at best, quaintly out of touch with the needs of researchers and academics who no longer expect or tolerate the delay and expense of seeking out printed copies of far-flung documents. We expect to find it on the open web, and not hidden behind a paywall, either.

In my field, computer science (the very field which, ironically, created all this new publishing technology in the first place), some of the most restrictive copyright policies can be found in the two largest and oldest professional societies: the ACM and the IEEE.

Fortunately, these copyrights have been honored mostly in the breach as far as author-based web publishing has been concerned. Many academics make their papers available on their personal web sites, a practice that a growing number of university libraries, including my own, have begun to formalize by hosting institution-wide web repositories of faculty papers. This practice has flourished largely through a liberal reading of a provision -- a loophole -- in many copyright agreements that allows authors to share "preprint" versions of their papers.

But times may be changing, and not for the better. Some time in January, the IEEE apparently quietly revised its copyright policy to explicitly forbid us authors from sharing the "final" versions of our papers on the web, now reserving that privilege to themselves (available to all comers, for the right price). (To be fair to IEEE, the ACM's official policy is at least as bad. Not all technical societies are like this; for example, Usenix, on whose board I serve, manages to thrive despite making all its publications available online for free, no paywall access required.) I found out about this policy change in an email sent to all faculty at my school from our librarian this morning:

February 28, 2011

Dear Faculty,

I am writing to bring to your attention a recent change in IEEE's policy for archiving personal papers within institutional repositories. IEEE altered their policy in January from allowing published versions of articles to be saved in repositories, like ScholarlyCommons, to only allowing pre-published versions. We received no prior notice about this change.

As a result, if you or your students/colleagues publish with IEEE and submit papers to ScholarlyCommons, I am writing to ask that you PLEASE REFRAIN FROM UPLOADING ANY NEW PUBLISHED VERSIONS OF ARTICLES. It is unclear yet whether IEEE material uploaded prior to January already within ScholarlyCommons will need to be removed. Anything new added at this point, however, would be in violation of their new policy.

...

Enough is enough. A few years ago, I stopped renewing my ACM and IEEE memberships in protest, but that now seems an inadequate gesture. These once great organizations, which exist, remember, to promote the exchange and advancement of scientific knowledge, have taken a terribly wrong turn in putting their own profits over science. The directors and publication board members of societies that adopt such policies have allowed a tunnel vision of purpose to sell out the interests of their members. To hell with them.

So from now on, I'm adopting my own copyright policies. In a perfect world, I'd simply refuse to publish in IEEE or ACM venues, but that stance is complicated by my obligations to my student co-authors, who need a wide range of publishing options if they are to succeed in their budding careers. So instead, I will no longer serve as a program chair, program committee member, editorial board member, referee or reviewer for any conference or journal that does not make its papers freely available on the web or at least allow authors to do so themselves.

Please join me. If enough scholars refuse their services as volunteer organizers and reviewers, the quality and prestige of these closed publications will diminish and with it their coercive copyright power over the authors of new and innovative research. Or, better yet, they will adapt and once again promote, rather than inhibit, progress.

Until that changes, I'll confine my service to open-access conferences such as those organized by Usenix.

Update 4 March 2011: I'm told that some ACM sub-groups (such as SIGCOMM) have negotiated non-paywalled access to their conferences' proceedings. So conference organizers and small groups really can have an impact here! Protest is not futile.

Update 8 March 2011: A prominent member of the ACM asserted to me that copyright assignment and putting papers behind the ACM's centralized "digital library" paywall is the best way to ensure their long-term "integrity". That's certainly a novel theory; most computer scientists would say that wide replication, not centralization, is the best way to ensure availability, and that a centrally-controlled repository is more subject to tampering and other mischief than a decentralized and replicated one.

Usenix's open-access proceedings, by the way, are archived through the Stanford LOCKSS project. Paywalls are a poor way to ensure permanence.

Update 9 March 2011: David A. Hodges, IEEE VP of Publication Products and Services, just sent me a note (for some reason in PDF format) "clarifying" the new policy. He confirms that IEEE authors are still permitted to post a pre-publication version on their own (or their employer's) web site, but are now (as of January) prohibited from posting the authoritative "published" PDF version, which will be available exclusively from the IEEE paywall. (You can read his note here [pdf].)

Still no word on whether there's a reason for this policy change other than the obvious rent-seeking behavior that it appears to be. According to this FAQ [pdf], the reason for the policy change is to "exercise better control over IEEE's intellectual property". Which is exactly the problem.

Update 2 March 2011: There's been quite a response to this post; I seem to have hit a high-pressure reservoir of resentment against these anti-science publishing policies. But several people have written me defending ACM's copyright transfer in particular as being "not as bad", since authors are permitted to post an "author prepared" version on their own web sites if they choose. Yes, a savvy ACM author can prepare a special version and hack around the policy.

But the copyright remains with ACM, and the authoritative reviewed final manuscript stays hidden behind the ACM paywall.

A couple of years ago Jutta Degener and I became the first people to solve James Randi's $1,000,000 paranormal challenge. We derived, from thousands of miles away, the secret contents of a locked box held in Randi's offices set up to test whether psychic "remote viewing" was possible. Not being actual psychics, we had to exploit a weak home-brewed cryptographic commitment scheme that Randi had cooked up to authenticate the box's contents rather than the paranormal powers he was hoping to test for, but we did correctly figure out that the box contained a compact disk. And being nice people, we never formally asked for the million bucks, although we did have a bit of fun blogging about the cryptologic implications of psychic testing, which you can read here.
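The post doesn't spell out how Randi's scheme worked, so the following is not a reconstruction of it. It is a generic Python sketch of one common way a home-brewed commitment goes wrong: if the published value is just a hash of a low-entropy secret, anyone can hash every plausible guess and compare, no psychic powers needed. Mixing in a random nonce that is revealed only when the commitment is opened closes that particular hole. The function names and the guess list are hypothetical.

```python
import hashlib
import hmac
import os

def weak_commit(secret):
    # Weak: the digest depends only on the secret, so guesses can be checked offline.
    return hashlib.sha256(secret.encode()).hexdigest()

def strong_commit(secret):
    # Stronger: a random 32-byte nonce, kept private until the commitment is opened.
    nonce = os.urandom(32)
    return hashlib.sha256(nonce + secret.encode()).hexdigest(), nonce

def verify_strong(digest, nonce, secret):
    candidate = hashlib.sha256(nonce + secret.encode()).hexdigest()
    return hmac.compare_digest(candidate, digest)

def brute_force(commitment, guesses):
    # "Remote viewing" by exhaustive search over a small space of candidates.
    for guess in guesses:
        if weak_commit(guess) == commitment:
            return guess
    return None

# Hypothetical candidate contents for the box.
guesses = ["a coin", "a photograph", "a compact disk", "a key", "a watch"]

published = weak_commit("a compact disk")
print(brute_force(published, guesses))  # a compact disk

digest, nonce = strong_commit("a compact disk")
print(brute_force(digest, guesses))  # None
print(verify_strong(digest, nonce, "a compact disk"))  # True
```

The nonce only helps if it is long, random, and kept secret until opening; a short or predictable nonce just enlarges the guess space slightly.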

Our feat of "psychic cryptanalysis" got a bit more attention than I had expected given that our earthly cryptographic abilities are anything but paranormal, but you never know where the Internet will take things. But I was even more surprised when someone recently sent me a link to this final exam from a contracts course at the Stetson University College of Law [pdf].

Now, my mother definitely didn't raise me to be a law school exam question, and yet there we are, playing a starring role in the question on the fourth page. I have no idea whether to be flattered or horrified, but for the record (especially in case the IRS is reading), we never asked for or received the million dollars. And I've definitely never been to an Alaskan psychic's convention.

The one thing I'm sure of is that Prof. Jimenez (who I've never met) will be making a guest appearance on some exam of mine in the near future. In a perfect world, he might play a role in a question involving copyright infringement, defamation, and false-light privacy, but since I teach computer science, not law, something about operating systems will probably have to do instead.

Fair warning: If I give a talk -- at your conference, lecture series, meeting, whatever -- and you ask me for "a copy of my presentation" I'm probably going to refuse. It isn't personal and I'm not trying to be difficult. It's just that I have nothing that I can sensibly give you.

Many speakers these days make their visual aids available, but I don't. I don't always use any, but even when I do, they just aren't intended to be comprehensible outside the context of my talk. Creating slides that can serve double duty as props for my talk and as a stand-alone summary of the content is, I must confess, a talent that lies beyond the limits of my ability. Fortunately, when I give a talk I've usually also written something about the subject too, and almost all my papers are freely available to all. Unlike my slides, I try to write in a way that makes sense even without me standing there explaining things while you read.

"Presentation software" like PowerPoint (and Keynote and others of that ilk) has blurred the line between mere visual aids and the presentations themselves. I've grown to loathe PowerPoint, not because of particular details that don't suit me (though it would be nice if it handled equations more cleanly), but because it gets things precisely backwards. When I give a talk, I want to be in control. But the software has other ideas.

PowerPoint isn't content to sit in the background and project the occasional chart, graph or bullet list. It wants to organize the talk, to manage the presentation. There's always going to be a slide up, whether you need it there or not. Want to skip over some material? OK, but only by letting the audience watch as you fast-forward awkwardly through the pre-set order. Change the order around to answer a question? Tough -- should have thought of that before you started. You are not the one in charge here, and don't you forget it.

When I give a talk, I like to rely on a range of tools -- my voice, hand gestures, props, live demos, and, yes, PowerPoint slides. I tend to mix and match. In other words, from PowerPoint's perspective, I'm usually using it badly, even abusively. I often ignore the slides for minutes on end, or digress on points only elliptically hinted at on the screen. When I really get going, the slides are by themselves useless or, worse, outright misleading. Distributing them separately would at best be an invitation to take them hopelessly and confusingly out of context, and at worst, a form of perjury.

Unfortunately, "PowerPoint" has become synonymous these days with "presentation", but I just don't work that way. Maybe you don't work that way either. There's no one-size-fits-all way to give a talk, or even a one-size-fits-me way. So when I'm asked for my slides, I must politely refuse and offer my papers as a substitute (an idea I owe to the great Edward Tufte).

Fortunately, I'm senior enough (or have a reputation for being cranky enough) that I can usually get away with refusing. Sometimes, though, when pressed hard, I'll give in and send these slides [pdf].

Several people have thoughtfully suggested their favorite alternatives to PowerPoint (Prezi seems to be the popular choice), which I'll certainly check out. And for the record, yes, I know about (and use when I can) PowerPoint's "presenter" mode, which improves control over the audience display. Unfortunately, both alternative software and presenter mode, while improvements, are at best unreliable, since they assume a particular configuration on the projecting computer. It often isn't possible to project from a personal laptop (especially in conferences run on tight schedules), leaving us at the mercy of whatever is at the podium. And that often means PowerPoint in single-screen mode.

In any case, while there is certainly room for me to improve my mastery of PowerPoint and its alternatives, this wouldn't solve the basic problem, which is that, in my case at least, my slides -- when I use them at all -- aren't the content. They won't help you understand things much more than would any of the other stuff I also happen to bring up on stage with me, like, say, my shoes (which you can't have, either). But you're welcome to my papers.

Addendum 26 November 2010: This post sure has struck a (perhaps dissonant) chord somewhere, especially for a long holiday weekend. I'm grateful to all who've emailed, blogged, and tweeted.